Digital planning delivers value right where your business needs it

99+%

Product availability

20 – 30%

Reduction in inventory

10 – 30%

Less waste

40 – 90%

Reduction in planning workload

We help companies to:

Realize unrivaled inventory performance to maximize revenue and profitability

ToolsGroup provides industry-leading Multi-Echelon Optimization and Replenishment, an advanced optimization technique that decreases network-wide inventory by as much as 50% while increasing service level performance by 5-10 percentage points.

Build resilience and prepare for uncertainty with advanced probabilistic planning

With our Probabilistic Forecasting and Probabilistic Inventory Planning, organizations can neutralize uncertainty, improve forecast accuracy by 5-10 percentage points, and calculate optimal stock levels to best achieve service targets.

Sense and respond intelligently to demand and supply disruptions

ToolsGroup is a leader in Dynamic Planning, a system that anticipates fluctuations in demand and supply, automatically adjusting inventory and replenishment based on real-time market changes to ensure customer satisfaction.

Guide smarter decisions with AI so planners can focus on big picture value

SO99+ and JustEnough are designed to automate many of the manual tasks that planners face every day. Users experience a 40-90% reduction in planner workload, freeing planners to work on strategic initiatives.

Deliver outstanding customer experiences with real-time visibility across the enterprise

ToolsGroup’s Dynamic Data Unification Platform tracks events from transactional systems in real time to deliver a digital supply chain twin of demand, supply, inventory, and operations.

Optimize omnichannel experiences with unified commerce

Dynamic, integrated retail planning and execution solutions deliver connected, customer-centric experiences across every channel.

Supply chain innovators from Day One

Inertia? Never heard of it.

After 30 years, our momentum has only increased, and we continue to revolutionize retail and supply chain planning technology – and help our customers accelerate their supply chain evolution.

Customer stories

Discover how customers are navigating the unexpected with ToolsGroup.

“We chose ToolsGroup because of its ease of use and the scalability of its applications, which made it easy to invest in and evolve with as our company transforms.”

Francisco Javier Fernández, Supply Chain Director

“With ToolsGroup SO99+, we have the AI-driven supply chain planning solutions that allow us to adapt quickly and stay flexible, helping us meet our own business goals while delivering on our promises to customers and strengthening our relationships with suppliers.”

Sarah Voorhees, VP Demand and Inventory Planning

“We chose ToolsGroup because they are a leading worldwide provider of retail planning solutions. We wanted to work with a partner that…could teach us what we should be doing, align our bespoke practices, and provide a single source of the truth.”

Jolann Van Dyk, CIO of Kathmandu

“Now we can focus on more added-value work because the data crunching doesn’t consume any time on our end.”

Ilaria Maruccia, EMEA WG&PGA SIOP Manager

“An accurate sales forecast and a lean supply chain allowed us to optimize stock to guarantee product availability in all Nespresso sales channels.”

Simone Zaffuto, Supply Chain Manager

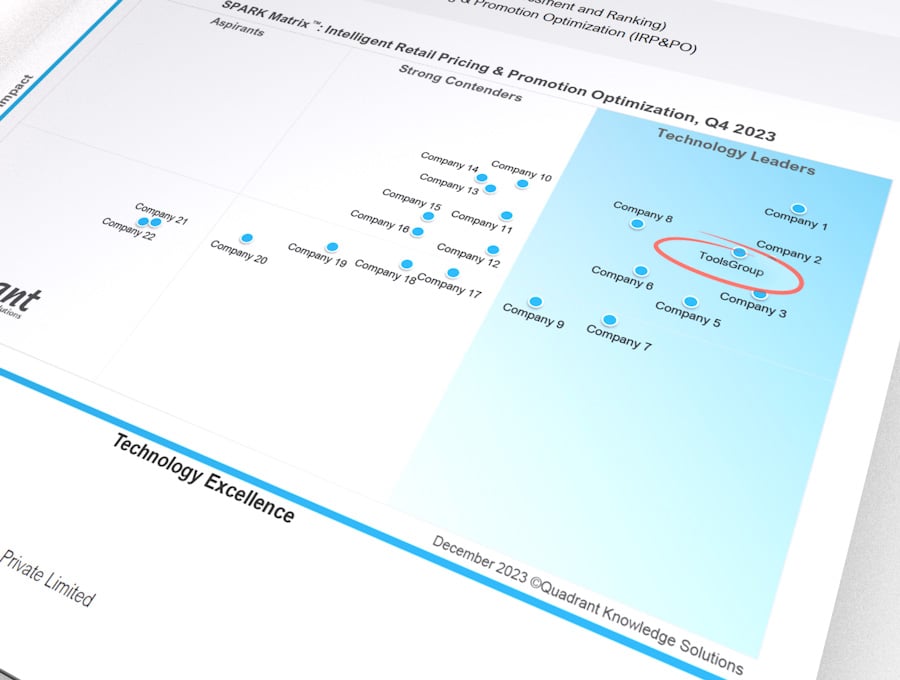

We think you’ll love ToolsGroup, but don’t just take our word for it

Latest insights

We’d love to hear from you

Whether you’re curious about solution features or business benefits, we’re ready to answer any and all questions.

Let’s Chat

![[On-Demand Webinar] Storm on the Horizon](https://www.toolsgroup.com/wp-content/uploads/2023/12/Using-Weather-and-Climate-Data-to-Improve-Demand-Forecasting.jpg)